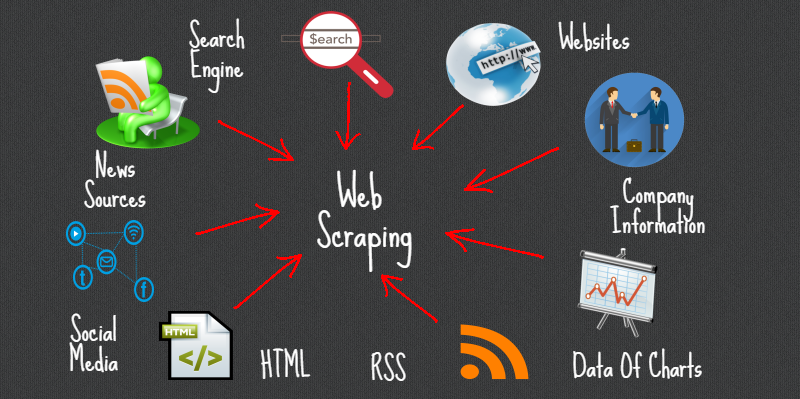

Web scraping has become an essential tool for extracting valuable data from websites, helping businesses, researchers, and developers gather insights for various purposes. However, beginners often make mistakes that can lead to inefficiencies, errors, or even legal trouble. While web scraping can be incredibly powerful, it’s important to approach it with the right knowledge and best practices. This article highlights the top 10 web scraping mistakes beginners should avoid, with tips to help you scrape websites effectively and ethically.

1. Ignoring Website Terms of Service

One of the most common mistakes beginners make when scraping data from websites is ignoring the website’s terms of service (TOS). Many websites have terms that prohibit or restrict automated data extraction. Violating these terms can result in your IP being blocked or, worse, legal action being taken against you. Before scraping any site, read and understand its TOS to make sure your scraping activities comply with the website’s rules. In many cases, websites provide public APIs as an alternative to scraping, which can be a safer and more efficient way to gather data without breaking any rules.
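The TOS itself has to be read by a person, but many sites also publish machine-readable crawling rules in a `robots.txt` file, and Python’s standard library can check them. This is a complement to reading the TOS, not a replacement for it. The rules, the user-agent name `my-scraper`, and the `example.com` URLs below are made up for illustration; a real scraper would load the site’s own file instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)
# can_fetch() answers whether this user agent may request the URL.
print(parser.can_fetch("my-scraper", "https://example.com/products"))
print(parser.can_fetch("my-scraper", "https://example.com/private/data"))
# crawl_delay() returns the requested delay in seconds, or None if unset.
print(parser.crawl_delay("my-scraper"))
```

Against a live site you would call `parser.set_url("https://example.com/robots.txt")` followed by `parser.read()` instead of `parse()`.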

2. Overloading the Server with Too Many Requests

Another mistake that many beginners make is sending too many requests to a website in a short period. Every request you send places some load on the website’s infrastructure. A flood of requests at once can slow the server down or even crash it, and it is likely to get your IP temporarily or permanently blocked. To avoid this, implement rate limiting in your scraping scripts so that requests are spaced out at reasonable intervals. Rotating user agents or proxies can make the traffic harder to block, but it only spreads the requests across different identities. It does not reduce the total load on the server, so it is no substitute for rate limiting.
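A minimal sketch of client-side rate limiting, assuming a simple fixed minimum gap between requests is enough for your target site. The class name `RateLimiter` and the interval value are illustrative choices, not part of any library.

```python
import time

class RateLimiter:
    """Block so that consecutive calls to wait() are at least min_interval seconds apart."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last: float | None = None  # time of the previous request, if any

    def wait(self) -> None:
        now = time.monotonic()
        if self._last is not None:
            # Sleep only for whatever part of the interval has not yet elapsed.
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()
```

In a scraping loop you would call `limiter.wait()` immediately before each request, for example `limiter = RateLimiter(2.0)` for at most one request every two seconds. `time.monotonic()` is used instead of `time.time()` so that system clock adjustments cannot shorten the gap.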

3. Failing to Handle Dynamic Content

Many modern websites use JavaScript to load content dynamically, which means the data you need is not present in the HTML the server returns when the page is first requested. Beginners often make the mistake of scraping only that initial HTML, which may not include the data loaded after the page has fully rendered. To handle dynamic content, either use a browser-automation tool such as Selenium or Puppeteer to render the JavaScript and capture the required data, or look for the API endpoints the page itself calls to fetch the data in a structured format. Failing to account for dynamic content can lead to incomplete or incorrect data extraction.
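If the browser’s developer tools (Network tab, filtered to XHR/Fetch requests) show that the page loads its data from a JSON endpoint, you can often request that endpoint directly and skip rendering altogether. A minimal sketch follows; the URL and the response shape `{"items": [{"name": ..., "price": ...}]}` are hypothetical and must be replaced with whatever the real endpoint returns.

```python
import json
import urllib.request

# Hypothetical endpoint discovered in the browser's Network tab.
API_URL = "https://example.com/api/products?page=1"

def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """Request a JSON endpoint and decode the response body."""
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)

def parse_products(payload: dict) -> list[dict]:
    """Keep only the fields we need from the (assumed) response structure."""
    return [
        {"name": item["name"], "price": item["price"]}
        for item in payload.get("items", [])
    ]
```

Keeping parsing separate from fetching lets you test `parse_products` against a saved response without touching the network; in use, the two combine as `parse_products(fetch_json(API_URL))`.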

4. Scraping Too Much Data at Once

Newcomers to web scraping often make the mistake of scraping an entire website or an excessive amount of data all at once. While this may seem efficient, it can quickly lead to errors, data overload, and slower processing. It’s better to break down the scraping task into smaller, manageable chunks. By limiting the scope of your scraping activities, you can focus on specific pages or sections of the website and reduce the chances of overwhelming your system or violating a website’s TOS. Additionally, scraping in smaller batches can help with data quality, allowing you to verify and clean data before moving on to the next batch.
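One way to work in smaller chunks is to split the URL list into fixed-size batches and record which URLs are finished, so an interrupted run can resume rather than start over. In this sketch, `scrape_page` stands in for whatever hypothetical function fetches and parses a single page, and the in-memory `completed` set is a simple stand-in for a persistent checkpoint.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")
R = TypeVar("R")

def chunked(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield successive lists of at most `size` items."""
    it = iter(items)
    while batch := list(islice(it, size)):
        yield batch

def scrape_in_batches(
    urls: Iterable[str],
    batch_size: int,
    scrape_page: Callable[[str], R],
    completed: set[str],
) -> list[R]:
    """Scrape URLs batch by batch, skipping any already listed in `completed`."""
    results: list[R] = []
    pending = (u for u in urls if u not in completed)
    for batch in chunked(pending, batch_size):
        batch_results = [scrape_page(u) for u in batch]
        # This is the place to inspect or validate a batch before continuing.
        results.extend(batch_results)
        completed.update(batch)  # mark the batch as done (the checkpoint)
    return results
```

Saving `completed` to disk after each batch would let a crashed run pick up where it stopped.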

5. Not Cleaning or Structuring the Data Properly

After scraping data from a website, the next step is to clean and structure the data. Beginners often overlook this crucial step, resulting in messy data that is difficult to analyze or use. Raw data from websites may contain irrelevant information, inconsistencies, or missing values that can affect the accuracy of your analysis. It’s important to clean and structure the data using libraries like Pandas in Python to remove duplicates, fill in missing values, and convert the data into a more useful format such as a CSV or Excel file. Organizing and cleaning your data ensures that it can be used effectively for analysis or business purposes.
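A small Pandas sketch of the cleaning steps described above: trimming whitespace, converting price strings to numbers, removing duplicates, and filling missing values. The `name` and `price` fields are assumed for illustration, and filling missing prices with the median is only one of several reasonable policies. Dropping those rows or leaving them empty may suit your analysis better.

```python
import pandas as pd

def clean_products(records: list[dict]) -> pd.DataFrame:
    """Tidy raw scraped product records with assumed "name" and "price" string fields."""
    df = pd.DataFrame(records)
    # Strip stray whitespace that often surrounds text pulled out of HTML.
    df["name"] = df["name"].str.strip()
    # Turn strings like "$1,200.00" into floats; unparseable values ("N/A") become NaN.
    cleaned_price = (
        df["price"]
        .str.replace("$", "", regex=False)
        .str.replace(",", "", regex=False)
    )
    df["price"] = pd.to_numeric(cleaned_price, errors="coerce")
    # Keep the first occurrence of a product that was scraped more than once.
    df = df.drop_duplicates(subset="name").reset_index(drop=True)
    # One possible policy for missing prices: fill with the median of the known ones.
    df["price"] = df["price"].fillna(df["price"].median())
    return df
```

The cleaned frame can then be written out with `clean_products(raw).to_csv("products_clean.csv", index=False)`.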

6. Failing to Use Proxies or VPNs

Web scraping without proxies or a VPN can lead to your IP address being blocked by the target website, especially if you are scraping at scale. When too many requests are made from the same IP address, websites may detect this as suspicious activity and block access. Using proxies or rotating IP addresses can help distribute the requests, reducing the risk of detection and ensuring that your scraping activities remain undisturbed. Beginners often overlook the importance of proxies, thinking that scraping from a single IP address will not pose a problem, but this can quickly lead to blocked access, especially on popular websites.
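A minimal sketch of round-robin proxy rotation with the standard library, assuming you have a pool of proxies you are authorized to use and that the site’s terms permit this kind of access. The addresses below come from the 203.0.113.0/24 documentation range and are placeholders, and `ProxyRotator` is a hypothetical helper, not a library class.

```python
import itertools
import urllib.request

# Placeholder proxy addresses; substitute your own pool.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

class ProxyRotator:
    """Hand out urllib openers that cycle through a fixed list of proxies."""

    def __init__(self, proxies: list[str]) -> None:
        self._cycle = itertools.cycle(proxies)

    def next_opener(self) -> tuple[str, urllib.request.OpenerDirector]:
        proxy = next(self._cycle)
        # Route both http and https traffic through the same proxy.
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)
```

For each request, take `proxy, opener = rotator.next_opener()` and call `opener.open(url, timeout=10)`. Rotation should still be combined with rate limiting, because spreading requests across IPs does not lighten the load on the server.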

7. Not Handling CAPTCHAs

Many websites employ CAPTCHAs to prevent automated scraping. These challenges require human interaction to verify that the user is not a bot. Beginners often struggle with CAPTCHAs: their scripts stall or fail silently when a challenge appears, or they turn to bypass tricks without checking whether the site’s terms allow it. A CAPTCHA is usually a sign that the site has flagged your traffic as automated, so the first response should be to slow down and check whether an official API offers the same data. Where solving challenges is permitted, options include CAPTCHA-solving services such as 2Captcha, or browser-automation tools such as Selenium, which drive a real browser session and can pause so that a person can complete a challenge when one appears.
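Whatever approach you take, a scraper should at least notice when it has been served a challenge or a block and back off, rather than hammering the site with retries. The sketch below uses a deliberately simple heuristic: HTTP 403/429 responses, or a body containing one of a few marker phrases. The marker list is a hypothetical starting point and should be adjusted after you inspect the blocked responses you actually receive.

```python
# Hypothetical markers; real challenge pages vary from site to site.
CHALLENGE_MARKERS = ("captcha", "unusual traffic", "verify you are human")

def looks_like_challenge(status: int, body: str) -> bool:
    """Guess whether a response is a block or CAPTCHA page rather than real content."""
    if status in (403, 429):  # Forbidden / Too Many Requests
        return True
    lowered = body.lower()
    return any(marker in lowered for marker in CHALLENGE_MARKERS)

def backoff_delays(base: float, retries: int, cap: float = 300.0) -> list[float]:
    """Exponential backoff schedule: base, 2*base, 4*base, ... capped at `cap` seconds."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]
```

When `looks_like_challenge` returns `True`, the scraper can sleep through the delays from `backoff_delays` before retrying, and stop entirely, or hand over to a person, once they are exhausted.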

8. Overlooking Data Storage and Backup

Once data is scraped, it needs to be stored in an appropriate format for future analysis or processing. Beginners often overlook the importance of data storage and backup, leading to data loss or difficulty in managing large datasets. It’s crucial to save scraped data in structured formats, such as CSV, JSON, or SQL databases, depending on the volume and type of data. Additionally, make regular backups of your scraped data to avoid losing critical information due to server failures, disk errors, or accidental deletion.
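For modest volumes, SQLite (bundled with Python as `sqlite3`) covers both needs: structured storage and a straightforward backup. In this sketch the `items` table, its columns, and keying rows by URL are assumptions for illustration. Using the URL as the primary key means re-scraping a page updates its row instead of adding a duplicate.

```python
import sqlite3

def save_items(conn: sqlite3.Connection, items: list[dict]) -> None:
    """Insert or update scraped items, keyed by their URL."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS items (url TEXT PRIMARY KEY, title TEXT, price REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO items (url, title, price) VALUES (:url, :title, :price)",
        items,
    )
    conn.commit()

def backup_db(conn: sqlite3.Connection, backup_path: str) -> None:
    """Copy the whole database to backup_path using SQLite's online backup API."""
    dest = sqlite3.connect(backup_path)
    try:
        conn.backup(dest)
    finally:
        dest.close()
```

`Connection.backup` copies a consistent snapshot even while the source database is open. Writing the backup to a different disk or machine guards against the hardware failures mentioned above.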

9. Not Being Prepared for Website Changes

Websites are constantly being updated, and a structure that works today may not work tomorrow. Beginners often forget to account for changes in a website’s HTML structure, which can break their scraping scripts. For instance, if a website renames its CSS classes, changes its tags, or reworks its page layout, your script might fail to extract the correct data. To minimize this risk, write flexible code that targets stable attributes rather than exact layout and can tolerate minor changes in structure. A parsing library such as BeautifulSoup, or XPath expressions, makes such selectors easy to express, and regularly testing your scripts against the live site helps you catch breakage caused by site changes so you can adapt quickly.
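One way to make extraction more tolerant is to try a list of selectors in order and fail loudly when none of them match, so a layout change surfaces as a clear error rather than silently empty data. This sketch uses BeautifulSoup’s `select_one`. The selectors and the `SelectorError` name are hypothetical and would come from inspecting the real page.

```python
from bs4 import BeautifulSoup

# Hypothetical selectors: the first targets a stable data attribute, the others
# are fallbacks for older or redesigned versions of the page.
PRICE_SELECTORS = ["[data-testid='price']", "span.price", "div.product-price"]

class SelectorError(Exception):
    """Raised when no selector matches, which usually means the page layout changed."""

def extract_first(html: str, selectors: list[str]) -> str:
    """Return the text of the first non-empty element matched by any selector, in order."""
    soup = BeautifulSoup(html, "html.parser")
    for css in selectors:
        node = soup.select_one(css)
        if node is not None and node.get_text(strip=True):
            return node.get_text(strip=True)
    raise SelectorError(f"none of {selectors} matched; the page layout may have changed")
```

Running this against a saved copy of each target page in a scheduled test is a cheap way to learn about site changes before they corrupt a large scrape.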

10. Ignoring Ethical Considerations

Finally, one of the most significant mistakes beginners make is not considering the ethical implications of web scraping. Scraping websites without permission or scraping sensitive or private information can be illegal or unethical. Beginners should always ensure that they are scraping publicly available data and respecting privacy laws, such as GDPR in the European Union or CCPA in California. Additionally, ethical scraping includes being mindful of the impact your activities have on the target website and avoiding actions that could harm its operations or other users.

In conclusion, while web scraping can be a powerful tool, it’s essential for beginners to avoid common mistakes that can lead to technical, legal, or ethical problems. By taking the time to understand the rules, properly handling data, respecting website policies, and using best practices, you can scrape data more effectively and ensure that your scraping efforts are both productive and responsible. Remember to always test your code, use appropriate tools, and stay informed about any changes in the websites you are scraping to avoid complications. With these best practices in mind, web scraping can be a highly rewarding and efficient way to extract valuable insights.